AI Simplifies Medical Visits

Redesigning the After-Visit Summary

Picture this: a cancer patient walks out of an oncology appointment carrying a 20-page packet packed with jargon. Hours later, confused and anxious, they call the clinic to ask questions that were “answered” in the paperwork. It’s a cycle that plays out across health systems—driving patient anxiety, clinician burnout, and preventable mistakes.

At Inception Health (Froedtert Hospital’s innovation lab), we set out to fix the after-visit summary (AVS), reimagining it with AI to make post-visit information understandable, actionable, and safe. The product never shipped, but the work changed how we design with LLMs in healthcare.

The Investigation

I didn’t start with models; I started with people. What I saw was a system optimized for compliance, not comprehension. Through interviews and UX workshops with patients, providers, and administrators, the real pain points surfaced on both sides of the care experience. This work produced tangible artifacts: user journeys, an information architecture, and early working wireframes.

Patient realities

- Overwhelmed by 10–20 page packets full of medical terminology

- Generic instructions that ignored individual context

- Poor formatting that hid what mattered most

- No cultural or educational tailoring

Provider constraints

- Workflows that push generic, pre-populated instructions

- PDFs that meet regulatory requirements but miss patient needs

- Endless follow-up calls and messages

Insight: “We’re overwhelmed with phone calls. If patients truly understood their diagnosis the first time, we could spend more time on care.”

Design Strategy

Rather than polish a broken flow, I reframed the AVS as a conversation. What if AI could translate dense documentation into plain language, personalize to a patient’s needs, and answer questions in real time—while keeping the clinician informed and in control?

- Translate medical jargon into clear, plain language

- Personalize content to a patient’s education preferences

- Support immediate Q&A for concerns in the moment

- Offer culturally sensitive, appropriate education

- Keep providers in the loop to maintain safety and trust

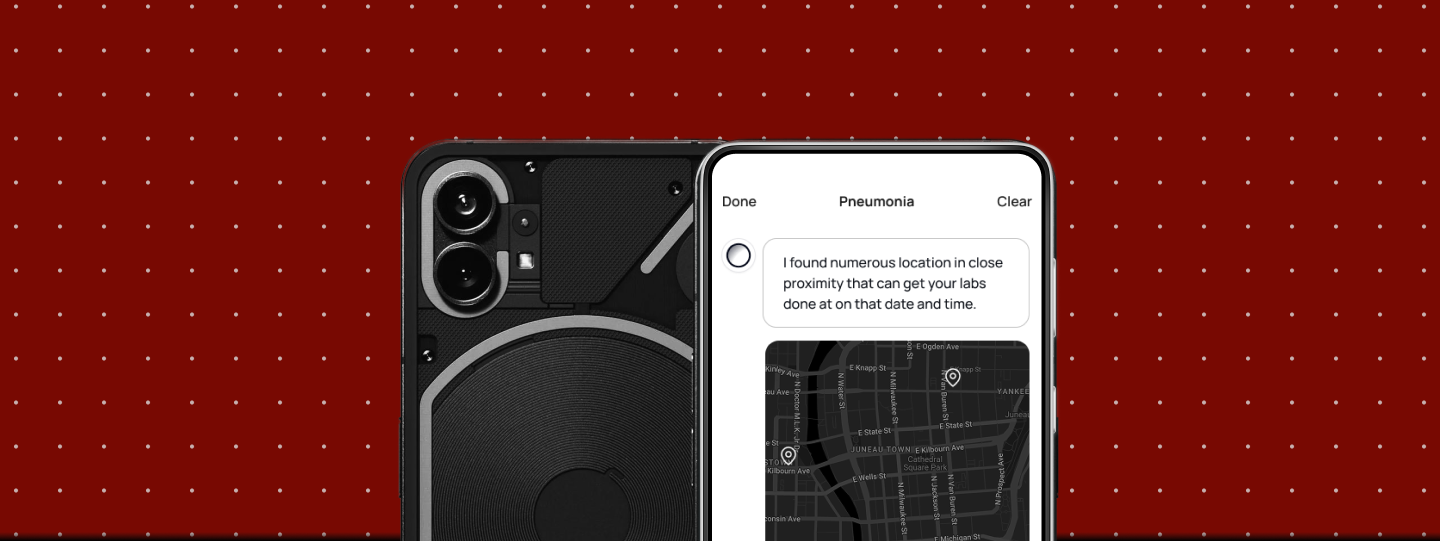

Concept 1

This concept centers on:

- Crafting AI-specific Terms of Service to set expectations and establish trust

- Enabling patients to get plain-language answers to detailed questions about diagnoses, labs, and treatment options

- Guiding next steps through context-aware checklists and proactive nudges

Concept 2

This concept centers on:

- A mixed-content dashboard combining clinician-written articles with clearly labeled AI explainers

- Context-aware checklists and proactive nudges to guide next steps

- AI-generated mini-podcasts that recap visit takeaways for on-the-go listening

Concept 3

This concept centers on:

- Wayfinding across care sites: clear guidance for navigating multiple clinics and treatment locations

- Hands-on admin help: guided completion of complex forms, insurance steps, and financial-assistance paperwork

Picking the Right Patient Population

Initially, I proposed starting with pneumonia because its patient journey is simpler, which would let us validate the model with fewer variables. A workshop confirmed this assumption and mapped the specific steps a pneumonia patient would take when using digital services to reach Froedtert Hospital. It was exciting and helped focus our initial designs.

Ultimately, we chose to focus on cancer because of our team’s direct relationships in oncology and the significant opportunity to improve understanding where it matters most. That access promised a higher-fidelity pilot and a richer learning environment, one that would let us improve patient care and address real problems more quickly. I later learned that Dr. Kothari joined our team because he saw the initial pneumonia designs and wanted to apply them to his own project.

The Planned Alpha

We designed a pilot for 200 cancer patients, an area where comprehension directly affects adherence and outcomes, and where our AI lead had close community ties for authentic feedback. The pilot was meant to answer four questions:

- Can patients accurately explain their diagnosis back to Dr. Kothari?

- Do repetitive calls drop?

- Are hallucinations prevented?

- Do patients trust the system?

The Business Impact: Modeled, Not Realized

- For patients: lower anxiety, better adherence, greater trust

- For providers: fewer repeat calls/messages, more time for meaningful care, higher job satisfaction

- For the health system: fewer communication-driven errors, lower operating costs, higher satisfaction scores

Leadership (Dr. Somai and Dr. Crotty) made a hard call and paused the project: amid a merger with ThedaCare, the urgent overtook the important.

What We Learned (and Now Practice)

- The Escape Hatch: Safe AI knows its limits. When confidence drops, it says so and hands off to a human. That candor earns trust and prevents harm.

- Modular by Default: Ship in small, testable components. Contain the blast radius, speed up iteration, and make compliance reviews straightforward.

- Observable by Design: Instrument everything that matters (what patients ask, model confidence, sources, and escalation moments) so failures are visible and the system learns safely.

- Constraints Create Leverage: Treat HIPAA and clinical realities as design inputs. Privacy-preserving patterns (role-based redaction, least-retention data flows) improved both safety and performance.

- Culture Before Code: Documentation habits and workflows are decades old. Pair UX with training, change management, and incentives so the new behavior actually sticks.
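To make the escape hatch and observability ideas concrete, here is a minimal Python sketch of how the two could fit together. It is illustrative only, not code from the project: the names `DraftAnswer`, `Reply`, `respond`, and `CONFIDENCE_FLOOR`, the 0.75 threshold, and the assumption that the model returns a confidence score between 0 and 1 are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical threshold; a real value would need clinical validation.
CONFIDENCE_FLOOR = 0.75


@dataclass
class DraftAnswer:
    """A model-generated answer before it reaches the patient (assumed shape)."""
    question: str
    text: str
    confidence: float  # assumed to be a score in [0, 1]
    sources: List[str] = field(default_factory=list)


@dataclass
class Reply:
    """What the patient actually sees."""
    text: str
    escalated: bool


def respond(draft: DraftAnswer, audit_log: List[dict]) -> Reply:
    """Return the draft, or hand off to a human when confidence or sourcing is weak."""
    escalate = draft.confidence < CONFIDENCE_FLOOR or not draft.sources

    # Observable by design: record the question, confidence, sources,
    # and whether this turn was escalated. A real system would redact
    # protected health information before writing this record.
    audit_log.append({
        "question": draft.question,
        "confidence": draft.confidence,
        "sources": list(draft.sources),
        "escalated": escalate,
    })

    if escalate:
        # Escape hatch: say so plainly and route to the care team.
        return Reply(
            text="I am not confident enough to answer that. "
                 "I have sent your question to your care team.",
            escalated=True,
        )
    return Reply(text=draft.text, escalated=False)


audit_log: List[dict] = []
confident = respond(
    DraftAnswer("What does stage II mean?", "Plain-language answer.", 0.92, ["visit note"]),
    audit_log,
)
unsure = respond(
    DraftAnswer("Can I stop my medication?", "Unsupported answer.", 0.40, []),
    audit_log,
)
print(confident.escalated, unsure.escalated)  # prints: False True
```

Keeping the escalation decision and the log entry in one small function also fits the modular principle: the threshold, the handoff message, and the logged fields can each be reviewed and tested on their own.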

From AVS Intelligence to Inception Intelligence

Cancer remains a leading cause of death, and the need for clear, equitable patient education is urgent. Our AVS Intelligence work—escape hatches, modular design, rigorous observability, and a full-journey vision for oncology—has since become part of our playbook for responsible AI. The merger paused one product, but not the mission: somewhere today, a patient is scared and confused, and a provider is fielding the fifth call about the same question. The solution is still within reach. When we build AI in healthcare, our goal isn’t to show sophistication—it’s to build bridges of understanding between people who need care and people who provide it.